2005-10-15 19:13:00

Scalability Story

Here at SourceGear, I wear several different hats. One of my roles is that I'm "the guy who talks to the prospective Vault customers with large teams". If you've got a team of 25 people, you can simply come to our online store and buy the product. But if you want to use Vault for a team of 300 people, I want to have a conversation with you.

Don't call me a Sales Guy or any other vulgar names. I don't make cold calls. I don't pester anyone. I just make myself available to talk with larger customers who are trying to make a decision about Vault. I like to understand the customer's situation, make sure Vault is the right choice, and help ensure a smooth transition.

#ifdef marketing_digression

Sometimes I say "no". Earlier this year, I had a conversation with a prospective Vault customer who wanted to put several thousand users on a single Vault server. In this case, saying "no" was the right decision. That was a perfect example of a customer we don't want.

Right now you might be thinking how ridiculous that sounds. Don't we want every customer we can get?

Actually, good marketing usually results in a clear understanding of which customers you want and which customers you don't. It's very difficult to be the best product for your target niche if you are not genuinely prepared to say "no" to everybody who is outside that niche. If you cannot easily identify the customers that you don't want, then you probably need to give your strategy more thought.

Consider McDonald's. They know what they do, and they do it very well.

Suppose a customer comes in to McDonald's and says, "I'd like a burger and fries and a pint of Guinness and I want to be seated at a table in the smoking section with a big-screen TV so I can watch the game." Does the manager worry about turning away a customer? Perhaps he goes back to his office and wonders if the entire McDonalds strategy is all wrong?

Certainly not. He simply tells the customer no: "That's not what we do here."

And so it is with SourceGear as well. Our products are designed to meet the needs of the software teams in the professional segment, extending up somewhat into the small enterprise. Most of our sites have less than 100 developers. Our larger sites have teams numbering in the hundreds, not in the thousands. Customers with 5,000 developers tend to have a very different class of needs. That's not what we do here.

#endif

Anyway, since I am often talking to our bigger customers, I am often thinking about Vault's level of scalability.

Scalability

I define "scalability" as "the ability of a software system to cope as the size of the problem increases".

Note that scalability and performance are related, but different. Explaining these terms in the language of calculus, if performance is a function, scalability is about the first derivative of that function. A piece of software can be very scalable, even if its performance is poor for small values of n. For example, I've heard people describe Exchange that way. Apparently an Exchange server with 5 users is kind of slow, but an Exchange server with 5,000 users is no slower. (I've never used Exchange so I can't really say if that's true.)

When I say "the size of the problem", I am speaking very generally. Continuing my notion of a mathematical curve, scalability can involve different variables on the X axis. A product might scale very well for large numbers of users but very poorly for large quantities of data. A database might scale very well for large numbers of rows but very poorly when the individual rows are large.

Finally, let's not confine scalability to things which can be measured with a stopwatch. Sometimes scalability problems are a bit more qualitative. For example, the Vault Admin tool presents the list of users in a regular Windows listbox control. That's fine with 100 users, but it's not exactly the right UI for a system with 5,000 users.

Scalability Issues for Version Control Tools

Thousands of teams are using Vault, and the vast majority of them are very happy. However, we've had a few customers over the years who have struggled with Vault, and scalability has been a common theme. It turns out that for a version control system, scalability can bring some very challenging problems.

Simple things become grotesque. For example, one of the fundamental things a version control system must do is figure out if the user has made any modifications to their local copies of the files. Sounds simple, right? It mostly is. But if you implement a simple solution and test it on a tree with 100 files, you will probably find later that it doesn't work quite so well when somebody tries it on their tree with 25,000 files.

Back in the days of Vault 1.0, we were courting a certain banner name customer who was interested in migrating from SourceSafe to Vault. We spent a lot of time and effort, including trips to their location. In the end, we lost the deal, largely because our product just didn't work very well for a team their size. This team had 75 developers working on a tree with around 70,000 files. Today, Vault 3.1 can usually handle problems like that without breaking a sweat.

The thing about source control is that there are so many different scalability factors to consider. Some people have lots of users. Some have lots of files. Some have really big files. Some teams lock thousands of files even if they don't plan to edit them. The variable on the X axis varies from customer to customer.

We have worked to make our product more scalable with every release. In fact, Vault 3.1 was a major step forward from Vault 3.0. Since I played a part in this, I would like to tell the story of how it happened. I'm mostly just a marketing guy these days, so on the all-too-rare occasion that I break out Visual Studio for some development work, I want to talk about it. :-)

We love automated testing

We are big believers in automated testing. We've got unit tests and "smoke tests" that run on every build. We've got a test repository with 500,000 files in it. We've got tests that randomly retrieve old versions to make sure they're still okay. We have a "Combinatorial Test" which randomly performs weird operations and finds ridiculously arcane edge cases. We like automated test apps. Every so often we write another one.

During the 3.1 development cycle, I was feeling bored and a little ornery. I didn't have much involvement in the 3.1 code, and I was itching to use a compiler, so I took some time and wrote a test application we call "Crowd Test". My goal was to exercise the Vault server under seriously heavy load from many simultaneous users. I wanted Crowd Test to be sadistic and cruel and unfair.

How it Works

Crowd Test is a Vault client which runs in an infinite loop that looks something like this:

- Randomly choose an action to perform.

- Sleep for a random amount of time.

- Go back to step 1.

The list of known actions includes most everything that can be done to a Vault server. For example, there are actions to add files, create folders, delete things, run history queries, and apply labels.

The algorithm which randomly selects an action uses weights so that some actions are more likely to happen than others. The weights are tuned to roughly simulate the usage pattern of real users. For example, modifying a file is more likely to happen than deleting a folder or creating a branch.

We usually set the sleep time fairly low, averaging around 30 seconds between actions. , Real users don't perform a source control operation that often. However, with a low idle time between actions, when we run a simple test with say 10 Crowd Test clients simultaneously, we are actually burdening the server with a load that is higher than that which would be caused by 10 human users.

The actions are also tuned to ensure that the size of the repository is always increasing. The longer Crowd Test runs, the larger the repository gets.

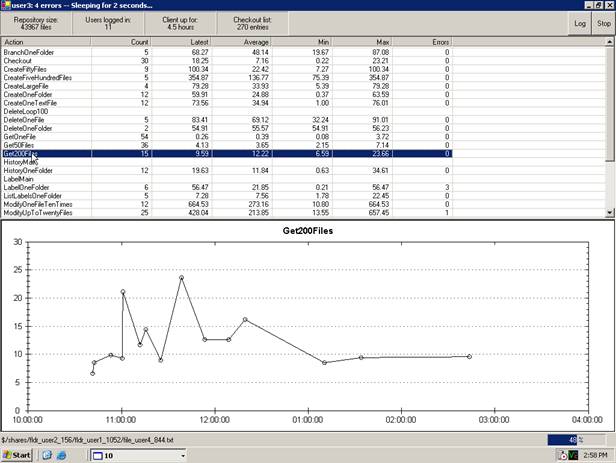

Each Crowd Test client records the elapsed time for every action and plots the resulting data with simple line graphs. In this way, we can get a visual depiction of just how the performance of each operation changes as the repository grows. (BTW, for the plotting I used ZedGraph, which is very cool.)

Here's a screen shot of a Crowd Test client:

I spent a couple of weeks writing the initial version of Crowd Test. All of my early test runs took place on my main development machine. I remember running one Crowd Test client overnight, returning the next morning to find 14 hours of results, nicely graphed. Everything was going exactly as planned, so I was ready to try a test with multiple clients hitting a real server. For my test server machine, I grabbed a dual-P3 with a gig of RAM, not too beefy, not too wimpy.

I had high expectations. Nobody had ever tortured a Vault server this badly before! I started a bunch of Crowd Test clients and went to lunch. After lunch, I would start examining the graphs and beginning identifying all the tweaks which could be made to improve scalability.

The actual results were not at all what we expected. When I designed Crowd Test to be sadistic, I succeeded.

Nightmare on Farber Street

The Vault server died before I ever got my chicken sandwich. When I returned from lunch, I discovered that the server was FUBAR not long after starting the test. From that point on, it was still listening to requests but it was immediately returning failure codes for every action.

I stopped the test and started looking for some kind of configuration problem. At first, I didn't really consider the possibility that I had found a bug in the server. But the more I investigated, the more horrified I became. Within a couple days, the truth was painfully clear: Vault 3.0 had a terrible problem.

I recruited some help from Jeff (a Vault server developer) and we got that bug fixed in reasonably short order. Embarrassingly, it was an issue of thread safety. During the development of Vault 3.0, we did several things to increase concurrency from 2.0. Apparently we left a couple of places where the server wasn't thread-safe. Under typical usage, the problem rarely if ever appeared, but Crowd Test created conditions which made this bug appear in minutes. (This problem was fixed for 3.0 users with the 3.0.7 maintenance release.)

We returned to the Crowd Test effort, assuming that we were now ready to proceed with the fine tuning and optimization work. Once again, things didn't work out the way we expected.

I'll spare you the play calling and give you the box score: We dragged Ian (another Vault server developer) into our little team, and the three of us spent most of April, May and June working with Crowd Test. Mostly I stayed on the client side and let the other two guys do all the server work, but I still ended up learning a whole lot more about SQL Server than I ever wanted to know. We found and fixed a whole bunch of problems, including the Big Ugly Thread Concurrency Bug and a Huge Memory Leak and a Bunch of Deadlocks in the SQL Layer and a Big Performance Problem with Folder Security Checks and more.

I don't know if this experience really fits the definition of "Test Driven Development", but we were definitely feeling "driven" by Crowd Test. :-)

It was bittersweet experience. On the one hand, we were horrified each time we discovered another hideous scalability problem in our shipping product. On the other hand, the improvement we were making in the quality of Vault was incredible.

The Bottom Line

Fortunately, the actual negative impact of all the problems we found was rather small. None of our customers abuse their Vault server as badly as Crowd Test does. Our product was taxing the patience of some customers, but nobody lost any data or anything like that. Most users were completely unaffected.

On the positive side, as our larger sites upgrade to 3.1, they are getting much happier.

The efforts we started here will continue. In fact, as I was proofreading a final draft of this article, Ian walked in and asked for more hardware for Crowd testing. :-)

That's good, because we will probably always have more work to do. For example, in Vault 4.0 we really need to speed up the branch command. It's not a problem for most users, but we've got one medium-sized customer whose development process has them creating branches extremely often, like every day. Our branching design is sound, but the implementation was done with the assumption of it being a rather infrequent operation. A bit of localized surgery on the branch command will help a lot.

Today Vault scalability is very good, better than it has ever been. When I talk with prospective customers, I've got more confidence than ever, but the journey is a little bumpy sometimes.

These experiences allow me to feel a certain measure of identification with the developers working on Team Foundation Server at Microsoft. They've accomplished a lot already, but to some extent they are just now embarking on this journey, especially because their scalability goals aim much higher. Unlike SourceGear, the Team System folks actually do want to create a product which is well suited to teams with 5,000 developers. Perhaps more importantly, I daresay they want their product to eventually be used throughout Microsoft itself, especially on the massive teams which develop Office and Windows. With their 1.0 release apparently only a few months away, they have some very interesting times ahead of them. Best wishes and a pleasant journey to Brian Harry and his team.

So Eric, are you trying to make a point here?

Nah, not really. I'm just telling stories. I believe in transparency.